Detailed description

If you're new to machine learning and TensorFlow, you may want to read MNIST for ML Beginners[1] and Basic Usage[2] first for a gentle introduction to handwritten digit classification (MNIST) and to using TensorFlow.

The following source code comes from the TensorFlow packages and uses a convolutional model. For a better understanding, you could study Convolutional Neural Networks and Deep MNIST for Experts[3] before going through the sample code.

All functions used below are documented in the TensorFlow API reference[4].

There are also some awesome materials[5] and videos[6][7] online.

Basic concept

TensorFlow is a programming system in which you represent computations as graphs. A TensorFlow graph is a description of computations. Nodes in the graph are called ops (short for operations). An op takes zero or more Tensors (multi-dimensional arrays), performs some computation, and produces zero or more Tensors.

To compute anything, a graph must be launched in a Session. A Session places the graph ops onto Devices, such as CPUs or GPUs, and provides methods to execute them. These methods return tensors produced by ops as numpy ndarray objects in Python, and as tensorflow::Tensor instances in C and C++.
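The "describe first, execute later" idea can be sketched without TensorFlow. The following is a toy analogy only: the names Node, constant and run are hypothetical and are not part of the TensorFlow API.

```python
# Toy analogy only: this is NOT TensorFlow code. Node, constant and run are
# hypothetical names used to illustrate building a graph before executing it.


class Node:
    """An op in the toy graph: a function plus the nodes that feed it."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs


def constant(value):
    # An op with zero inputs that always produces the same value.
    return Node(lambda: value)


def run(node):
    """Plays the role of a session: evaluate the inputs first, then the op."""
    return node.fn(*(run(i) for i in node.inputs))


# Building the graph computes nothing yet.
a = constant(3.0)
b = constant(2.0)
product = Node(lambda x, y: x * y, a, b)

# Only running the graph produces a value.
result = run(product)
print(result)
```

In real TensorFlow the session also decides on which device each op runs, which this sketch leaves out.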

Data Flow:

First import libraries you need and define some variables.

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import gzip
import os
import sys
import time

import numpy
from six.moves import urllib
from six.moves import xrange  # pylint: disable=redefined-builtin
import tensorflow as tf

SOURCE_URL = 'http://yann.lecun.com/exdb/mnist/'
WORK_DIRECTORY = 'data'
IMAGE_SIZE = 28
NUM_CHANNELS = 1
PIXEL_DEPTH = 255
NUM_LABELS = 10
VALIDATION_SIZE = 5000  # Size of the validation set.
SEED = 66478  # Set to None for random seed.
BATCH_SIZE = 64
NUM_EPOCHS = 10
EVAL_BATCH_SIZE = 64
EVAL_FREQUENCY = 100  # Number of steps between evaluations.

Create helper functions that download the data from Yann LeCun's website, unless it is already present locally, and extract it into numpy arrays.

def maybe_download(filename):
    """Download the data from Yann's website, unless it's already here."""
    if not tf.gfile.Exists(WORK_DIRECTORY):
        tf.gfile.MakeDirs(WORK_DIRECTORY)
    filepath = os.path.join(WORK_DIRECTORY, filename)
    if not tf.gfile.Exists(filepath):
        filepath, _ = urllib.request.urlretrieve(SOURCE_URL + filename, filepath)
        with tf.gfile.GFile(filepath) as f:
            size = f.Size()
        print('Successfully downloaded', filename, size, 'bytes.')
    return filepath

def extract_data(filename, num_images):
    """Extract the images into a 4D array [image index, y, x, channels].

    Values are rescaled from [0, 255] down to [-0.5, 0.5].
    """
    print('Extracting', filename)
    with gzip.open(filename) as bytestream:
        bytestream.read(16)  # Skip the 16-byte file header.
        buf = bytestream.read(IMAGE_SIZE * IMAGE_SIZE * num_images)
        data = numpy.frombuffer(buf, dtype=numpy.uint8).astype(numpy.float32)
        data = (data - (PIXEL_DEPTH / 2.0)) / PIXEL_DEPTH
        data = data.reshape(num_images, IMAGE_SIZE, IMAGE_SIZE, 1)
        return data
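The rescaling step in extract_data is plain arithmetic and can be checked on a few pixel values. A minimal sketch in plain Python, where the helper name rescale is hypothetical:

```python
PIXEL_DEPTH = 255  # Same constant as in the walkthrough.


def rescale(pixel):
    # Shift by half the depth, then divide by the depth:
    # the range [0, 255] is mapped onto [-0.5, 0.5].
    return (pixel - PIXEL_DEPTH / 2.0) / PIXEL_DEPTH


darkest = rescale(0)
brightest = rescale(255)
print(darkest, brightest)
```

The darkest pixel maps to -0.5 and the brightest to 0.5, so the inputs are centered around zero.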

def extract_labels(filename, num_images):
    """Extract the labels into a vector of int64 label IDs."""
    print('Extracting', filename)
    with gzip.open(filename) as bytestream:
        bytestream.read(8)  # Skip the 8-byte file header.
        buf = bytestream.read(1 * num_images)
        labels = numpy.frombuffer(buf, dtype=numpy.uint8).astype(numpy.int64)
    return labels
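The 16 and 8 bytes skipped by the two extract functions are the file headers of the MNIST IDX format: big-endian 32-bit integers holding a magic number (2051 for images, 2049 for labels), the item count and, for images, the row and column counts. A minimal sketch that reads such a header from a small synthetic file built in memory; the helper read_idx_header is hypothetical, and no real MNIST file is touched:

```python
import gzip
import io
import struct


def read_idx_header(stream, num_fields):
    """Read num_fields big-endian unsigned 32-bit integers from the stream."""
    return struct.unpack('>' + 'I' * num_fields, stream.read(4 * num_fields))


# Synthetic stand-in for an image file: header plus 2 blank 28x28 images.
raw = struct.pack('>IIII', 2051, 2, 28, 28) + b'\x00' * (2 * 28 * 28)
compressed = io.BytesIO()
with gzip.GzipFile(fileobj=compressed, mode='wb') as f:
    f.write(raw)
compressed.seek(0)

with gzip.GzipFile(fileobj=compressed, mode='rb') as bytestream:
    # Four fields of 4 bytes each make up the 16-byte image header.
    magic, count, rows, cols = read_idx_header(bytestream, 4)
    pixels = bytestream.read(rows * cols * count)

print(magic, count, rows, cols, len(pixels))
```

A label file would be read the same way with two header fields instead of four.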

main:

Get the data:

train_data_filename = maybe_download('train-images-idx3-ubyte.gz')
train_labels_filename = maybe_download('train-labels-idx1-ubyte.gz')
test_data_filename = maybe_download('t10k-images-idx3-ubyte.gz')
test_labels_filename = maybe_download('t10k-labels-idx1-ubyte.gz')

Extract it into numpy arrays:

train_data = extract_data(train_data_filename, 60000)
train_labels = extract_labels(train_labels_filename, 60000)
test_data = extract_data(test_data_filename, 10000)
test_labels = extract_labels(test_labels_filename, 10000)

Generate a validation set:

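validation_data = train_data[:VALIDATION_SIZE, ...]
validation_labels = train_labels[:VALIDATION_SIZE]
train_data = train_data[VALIDATION_SIZE:, ...]
train_labels = train_labels[VALIDATION_SIZE:]
num_epochs = NUM_EPOCHS
# Number of remaining training examples; used by the learning rate decay
# and by the training loop further down.
train_size = train_labels.shape[0]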

Feed the training samples and labels to the graph:

train_data_node = tf.placeholder(
    tf.float32,
    shape=(BATCH_SIZE, IMAGE_SIZE, IMAGE_SIZE, NUM_CHANNELS))
train_labels_node = tf.placeholder(tf.int64, shape=(BATCH_SIZE,))
eval_data = tf.placeholder(
    tf.float32,
    shape=(EVAL_BATCH_SIZE, IMAGE_SIZE, IMAGE_SIZE, NUM_CHANNELS))

tf.placeholder(dtype, shape=None, name=None)

Placeholder: a value that we'll input when we ask TensorFlow to run a computation.

- dtype: The type of elements in the tensor to be fed.

- shape: The shape of the tensor to be fed (optional). If the shape is not specified, you can feed a tensor of any shape.

- name: A name for the operation (optional).

These placeholder nodes will be fed a batch of training data at each training step using the feed_dict argument to the run() call below.

Assign variables:

The variables below hold all the trainable weights. Each is passed an initial value, which is assigned when we call tf.initialize_all_variables().run().

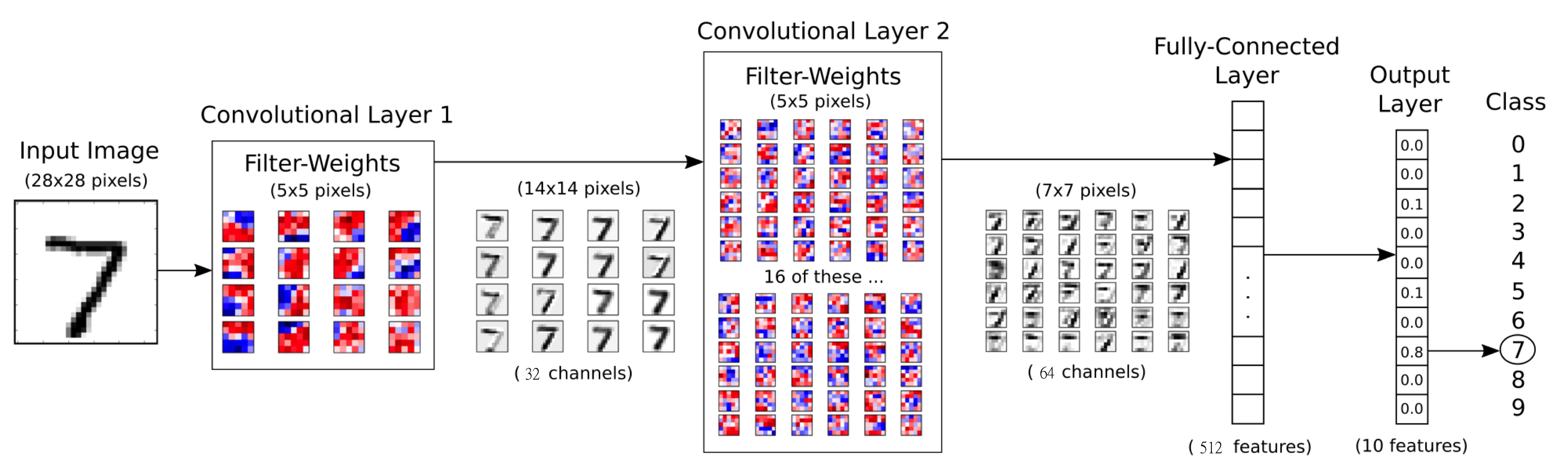

Convolutional layers: each consists of a convolution followed by max pooling.

First Convolutional Layer:

shape [5, 5, 1, 32]: one input channel, 32 features for each 5x5 patch.

Second Convolutional Layer:

shape [5, 5, 32, 64]: 32 input channels, 64 features for each 5x5 patch.

conv1_weights = tf.Variable(
    tf.truncated_normal([5, 5, NUM_CHANNELS, 32],  # 5x5 filter, depth 32.
                        stddev=0.1,
                        seed=SEED))
conv1_biases = tf.Variable(tf.zeros([32]))
conv2_weights = tf.Variable(
    tf.truncated_normal([5, 5, 32, 64],
                        stddev=0.1,
                        seed=SEED))
conv2_biases = tf.Variable(tf.constant(0.1, shape=[64]))
fc1_weights = tf.Variable(  # fully connected, depth 512.
    tf.truncated_normal(
        [IMAGE_SIZE // 4 * IMAGE_SIZE // 4 * 64, 512],
        stddev=0.1,
        seed=SEED))
fc1_biases = tf.Variable(tf.constant(0.1, shape=[512]))
fc2_weights = tf.Variable(
    tf.truncated_normal([512, NUM_LABELS],
                        stddev=0.1,
                        seed=SEED))
fc2_biases = tf.Variable(tf.constant(0.1, shape=[NUM_LABELS]))
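To get a feel for the size of the model, the shapes declared above can be turned into parameter counts. A minimal sketch in plain Python; the helper num_params and the layers dictionary are illustrative only, and the 7x7 spatial size assumes the two stride-2 pooling steps used later in this walkthrough:

```python
IMAGE_SIZE = 28
NUM_CHANNELS = 1
NUM_LABELS = 10

# Two 2x2 max-pooling steps with stride 2 halve each spatial dimension twice.
pooled_size = IMAGE_SIZE // 4               # 28 -> 14 -> 7
flattened = pooled_size * pooled_size * 64  # Inputs to the first dense layer.

# (weights shape, number of biases) for each layer, as declared above.
layers = {
    'conv1': ((5, 5, NUM_CHANNELS, 32), 32),
    'conv2': ((5, 5, 32, 64), 64),
    'fc1': ((flattened, 512), 512),
    'fc2': ((512, NUM_LABELS), NUM_LABELS),
}


def num_params(weight_shape, num_biases):
    """Multiply out the weight shape and add the bias count."""
    count = 1
    for dim in weight_shape:
        count *= dim
    return count + num_biases


param_counts = {name: num_params(*spec) for name, spec in layers.items()}
total_params = sum(param_counts.values())
print(param_counts, total_params)
```

Almost all of the parameters sit in the first fully connected layer, which is also one of the two layers the loss regularizes.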

tf.Variable()

A variable maintains state in the graph across calls to run(). You add a variable to the graph by constructing an instance of the class Variable.

tf.truncated_normal(shape, mean=0.0, stddev=1.0, dtype=tf.float32, seed=None, name=None)

Outputs random values from a truncated normal distribution.

- The generated values follow a normal distribution with specified mean and standard deviation, except that values whose magnitude is more than 2 standard deviations from the mean are dropped and re-picked.
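The "drop and re-pick" rule can be illustrated with the standard library. This is a sketch of the sampling rule only, not TensorFlow's implementation, and the function below is a hypothetical stand-in written in plain Python:

```python
import random


def truncated_normal(n, mean=0.0, stddev=1.0, seed=None):
    """Draw n samples, re-picking any that fall more than 2 stddev from the mean."""
    rng = random.Random(seed)
    values = []
    while len(values) < n:
        x = rng.gauss(mean, stddev)
        # Keep only samples within 2 standard deviations of the mean.
        if abs(x - mean) <= 2 * stddev:
            values.append(x)
    return values


samples = truncated_normal(1000, stddev=0.1, seed=66478)
print(min(samples), max(samples))
```

With stddev=0.1, as used for the weights above, every initial weight therefore lies within [-0.2, 0.2].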

tf.constant(value, dtype=None, shape=None, name='Const')

Creates a constant tensor.

- The resulting tensor is populated with values of type dtype, as specified by the arguments value and (optionally) shape.

model

2D convolution with 'SAME' padding (i.e. the output feature map has the same size as the input). Note that strides is a list of four values whose order matches the data layout: [image index, y, x, depth].

def model(data, train=False):
    conv = tf.nn.conv2d(data,
                        conv1_weights,
                        strides=[1, 1, 1, 1],
                        padding='SAME')

tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, data_format=None, name=None)

- input: A Tensor of shape [batch, in_height, in_width, in_channels]. Must be one of the following types: half, float32, float64.

- filter: A Tensor. Must have the same type as input.

- strides: A list of ints. 1-D of length 4. The stride of the sliding window for each dimension of input. Must be in the same order as the dimension specified with format.

- padding: A string from: "SAME", "VALID". The type of padding algorithm to use.

- use_cudnn_on_gpu: An optional bool. Defaults to True.

- data_format: An optional string from: "NHWC", "NCHW". Defaults to "NHWC". Specify the data format of the input and output data. With the default format "NHWC", the data is stored in the order of: [batch, in_height, in_width, in_channels]. Alternatively, the format could be "NCHW", the data storage order of: [batch, in_channels, in_height, in_width].

- name: A name for the operation (optional).
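The effect of the padding argument on the output size can be worked out by hand. A minimal sketch of the usual size rules for one spatial dimension (SAME: ceil(input / stride); VALID: ceil((input - filter + 1) / stride)); the helper names are hypothetical:

```python
def ceil_div(a, b):
    """Integer ceiling division."""
    return -(-a // b)


def output_size(input_size, filter_size, stride, padding):
    """Output length along one spatial dimension."""
    if padding == 'SAME':
        return ceil_div(input_size, stride)
    if padding == 'VALID':
        return ceil_div(input_size - filter_size + 1, stride)
    raise ValueError('unknown padding: %r' % padding)


conv_same = output_size(28, 5, 1, 'SAME')     # 5x5 filter, stride 1
conv_valid = output_size(28, 5, 1, 'VALID')   # same filter without padding
pool1 = output_size(conv_same, 2, 2, 'SAME')  # 2x2 window, stride 2
pool2 = output_size(pool1, 2, 2, 'SAME')      # second pooling step
print(conv_same, conv_valid, pool1, pool2)
```

With 'SAME' padding and stride 1 the 28x28 image keeps its size through each convolution, and only the two stride-2 pooling steps shrink it, to 14x14 and then 7x7.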

    # Bias and rectified linear non-linearity.
    relu = tf.nn.relu(tf.nn.bias_add(conv, conv1_biases))

tf.nn.relu(features, name=None)

Computes rectified linear: max(features, 0).

- features: A Tensor. Must be one of the following types: float32, float64, int32, int64, uint8, int16, int8, uint16, half.

- name: A name for the operation (optional).

    # Max pooling. The kernel size spec ksize also follows the layout of
    # the data. Here we have a pooling window of 2, and a stride of 2.
    pool = tf.nn.max_pool(relu,
                          ksize=[1, 2, 2, 1],
                          strides=[1, 2, 2, 1],
                          padding='SAME')

tf.nn.max_pool(value, ksize, strides, padding, data_format='NHWC', name=None)

- value: A 4-D Tensor with shape [batch, height, width, channels] and type tf.float32.

- ksize: A list of ints that has length >= 4. The size of the window for each dimension of the input tensor.

- strides: A list of ints that has length >= 4. The stride of the sliding window for each dimension of the input tensor.

- padding: A string, either 'VALID' or 'SAME'. The padding algorithm.

- data_format: A string. 'NHWC' and 'NCHW' are supported.

- name: Optional name for the operation.
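What a pooling window of 2 with a stride of 2 does to a single feature map can be shown on a small grid. A minimal plain-Python sketch, assuming a 2-D list with even height and width; max_pool_2x2 is a hypothetical helper, not the TensorFlow op:

```python
def max_pool_2x2(image):
    """2x2 max pooling with stride 2 on a 2-D list with even height and width."""
    pooled = []
    for y in range(0, len(image), 2):
        row = []
        for x in range(0, len(image[0]), 2):
            # Each output value is the maximum of one non-overlapping 2x2 window.
            window = (image[y][x], image[y][x + 1],
                      image[y + 1][x], image[y + 1][x + 1])
            row.append(max(window))
        pooled.append(row)
    return pooled


image = [[1, 3, 2, 0],
         [4, 2, 1, 1],
         [0, 0, 5, 6],
         [1, 2, 7, 8]]
pooled = max_pool_2x2(image)
print(pooled)  # [[4, 2], [2, 8]]
```

Each spatial dimension is halved, which is why the 28x28 input ends up as 7x7 after two pooling steps.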

    conv = tf.nn.conv2d(pool,
                        conv2_weights,
                        strides=[1, 1, 1, 1],
                        padding='SAME')
    relu = tf.nn.relu(tf.nn.bias_add(conv, conv2_biases))
    pool = tf.nn.max_pool(relu,
                          ksize=[1, 2, 2, 1],
                          strides=[1, 2, 2, 1],
                          padding='SAME')

Reshape the feature map cuboid into a 2D matrix to feed it to the fully connected layers.

    pool_shape = pool.get_shape().as_list()
    reshape = tf.reshape(
        pool,
        [pool_shape[0], pool_shape[1] * pool_shape[2] * pool_shape[3]])

Fully connected layer. Note that the '+' operation automatically broadcasts the biases.

    hidden = tf.nn.relu(tf.matmul(reshape, fc1_weights) + fc1_biases)

Add a 50% dropout during training only. Dropout also scales activations such that no rescaling is needed at evaluation time. See [8] for more information about dropout.

    if train:
        hidden = tf.nn.dropout(hidden, 0.5, seed=SEED)

tf.nn.dropout(x, keep_prob, noise_shape=None, seed=None, name=None)

With probability keep_prob, outputs the input element scaled up by 1 / keep_prob, otherwise outputs 0. The scaling is so that the expected sum is unchanged.

- seed: A Python integer. Used to create random seeds. See set_random_seed for behavior.
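The scaling rule described above can be checked numerically. A minimal plain-Python sketch of the rule as stated (keep with probability keep_prob and scale by 1 / keep_prob, otherwise output 0); the function is a hypothetical stand-in, not the TensorFlow op:

```python
import random


def dropout(values, keep_prob, seed=None):
    """Keep each value with probability keep_prob and scale it by 1 / keep_prob."""
    rng = random.Random(seed)
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


activations = [1.0] * 10000
dropped = dropout(activations, keep_prob=0.5, seed=66478)
mean_after = sum(dropped) / len(dropped)
print(mean_after)
```

Roughly half of the outputs are 0 and the rest are 2.0, so the mean stays close to the original 1.0. This is why no rescaling is needed at evaluation time.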

tf.set_random_seed(seed)

Operations that rely on a random seed actually derive it from two seeds: the graph-level and operation-level seeds. This sets the graph-level seed.

Model return value

    return tf.matmul(hidden, fc2_weights) + fc2_biases

Training computation: logits + cross-entropy loss.

logits = model(train_data_node, True)
loss = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(
    logits, train_labels_node))

tf.nn.sparse_softmax_cross_entropy_with_logits(logits, labels, name=None)

Computes sparse softmax cross entropy between logits and labels.

- logits: Unscaled log probabilities.

- labels: Class indices rather than probability distributions. Each entry labels[i] must be an index in [0, num_classes).
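For a single example with integer class label y, the loss is -log(softmax(logits)[y]), which equals log(sum(exp(logits))) - logits[y]. A minimal plain-Python sketch of that formula, with a hypothetical helper name and made-up logits:

```python
import math


def sparse_softmax_cross_entropy(logits, label):
    """Cross entropy for one example whose label is an integer class index."""
    # Subtract the largest logit before exponentiating for numerical stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


batch_logits = [[2.0, 1.0, 0.1],   # fairly confident in class 0
                [0.0, 0.0, 0.0]]   # no preference among the 3 classes
batch_labels = [0, 2]
losses = [sparse_softmax_cross_entropy(z, y)
          for z, y in zip(batch_logits, batch_labels)]
mean_loss = sum(losses) / len(losses)
print(losses, mean_loss)
```

The uniform row costs log(3), the loss of a pure guess among three classes. The final averaging plays the role of tf.reduce_mean in the code above.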

Add the L2 regularization for the fully connected parameters to the loss

regularizers = (tf.nn.l2_loss(fc1_weights) + tf.nn.l2_loss(fc1_biases) +
                tf.nn.l2_loss(fc2_weights) + tf.nn.l2_loss(fc2_biases))
loss += 5e-4 * regularizers
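tf.nn.l2_loss computes half the sum of squares of its input, sum(t ** 2) / 2. A minimal plain-Python sketch of the penalty term, using made-up weights and a stand-in function of the same name:

```python
def l2_loss(values):
    """Half the sum of squares, mirroring the formula of tf.nn.l2_loss."""
    return sum(v * v for v in values) / 2.0


weights = [0.5, -1.0, 2.0]  # Made-up values for illustration.
raw_penalty = l2_loss(weights)
penalty = 5e-4 * raw_penalty  # Same weighting factor as in the loss above.
print(raw_penalty, penalty)
```

The small 5e-4 factor keeps the penalty from dominating the cross-entropy term while still discouraging large weights.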

Optimizer: set up a variable that's incremented once per batch and controls the learning rate decay (per epoch) using an exponential schedule starting at 0.01.

batch = tf.Variable(0)
learning_rate = tf.train.exponential_decay(
    0.01,                # Base learning rate.
    batch * BATCH_SIZE,  # Current index into the dataset.
    train_size,          # Decay step.
    0.95,                # Decay rate.
    staircase=True)
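The schedule follows base_rate * decay_rate ^ (global_step / decay_steps), where staircase=True makes the division an integer division. A minimal plain-Python sketch of that formula; the helper rate_at is hypothetical, and train_size = 55000 assumes the 60000 training images minus the 5000 held out for validation:

```python
def exponential_decay(base_rate, global_step, decay_steps, decay_rate,
                      staircase=False):
    """Plain-Python version of the exponential decay formula."""
    if staircase:
        exponent = global_step // decay_steps  # Whole decay periods only.
    else:
        exponent = global_step / float(decay_steps)
    return base_rate * decay_rate ** exponent


BATCH_SIZE = 64
train_size = 55000  # Assumed: 60000 training images minus VALIDATION_SIZE.


def rate_at(step):
    # The "global step" fed to the schedule is the number of examples seen.
    return exponential_decay(0.01, step * BATCH_SIZE, train_size, 0.95,
                             staircase=True)


print(rate_at(0), rate_at(859), rate_at(860))
```

The rate stays at 0.01 throughout the first epoch and drops by 5% once 55000 examples have been seen, which happens at step 860.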

Use simple momentum for the optimization

optimizer = tf.train.MomentumOptimizer(learning_rate, 0.9).minimize(loss, global_step=batch)
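The momentum update keeps a running accumulator of gradients: accumulator = momentum * accumulator + gradient, then variable -= learning_rate * accumulator. A minimal plain-Python sketch on the toy objective f(x) = x^2, which is made up for illustration and unrelated to the MNIST loss:

```python
def momentum_step(variable, accumulator, gradient, learning_rate, momentum):
    """One momentum update for a single scalar variable."""
    accumulator = momentum * accumulator + gradient
    variable = variable - learning_rate * accumulator
    return variable, accumulator


# Toy objective f(x) = x^2 with gradient 2x.
x, acc = 5.0, 0.0
for _ in range(500):
    grad = 2.0 * x
    x, acc = momentum_step(x, acc, grad, learning_rate=0.01, momentum=0.9)
print(x)
```

Starting from 5.0, x ends up very close to the minimum at 0. With momentum 0.9, each step also carries most of the previous update forward.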

Predictions for the current training minibatch

train_prediction = tf.nn.softmax(logits)

Predictions for the test and validation, which we'll compute less often

eval_prediction = tf.nn.softmax(model(eval_data))

Small utility function to evaluate a dataset by feeding batches of data to eval_data and pulling the results from eval_prediction. This saves memory and enables evaluation to run on smaller GPUs.

def eval_in_batches(data, sess):
    """Get all predictions for a dataset by running it in small batches."""
    size = data.shape[0]
    if size < EVAL_BATCH_SIZE:
        raise ValueError("batch size for evals larger than dataset: %d" % size)
    predictions = numpy.ndarray(shape=(size, NUM_LABELS), dtype=numpy.float32)
    for begin in xrange(0, size, EVAL_BATCH_SIZE):
        end = begin + EVAL_BATCH_SIZE
        if end <= size:
            predictions[begin:end, :] = sess.run(
                eval_prediction,
                feed_dict={eval_data: data[begin:end, ...]})
        else:
            batch_predictions = sess.run(
                eval_prediction,
                feed_dict={eval_data: data[-EVAL_BATCH_SIZE:, ...]})
            predictions[begin:, :] = batch_predictions[begin - size:, :]
    return predictions
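The else branch handles a final partial batch: because the placeholder has a fixed batch size, the last EVAL_BATCH_SIZE items are fed again and only the predictions for the not-yet-covered tail are kept. A minimal plain-Python sketch of the same indexing, where fake_predict is a hypothetical stand-in for the sess.run call and the batch size is shrunk to 4:

```python
EVAL_BATCH_SIZE = 4  # Shrunk for illustration; the walkthrough uses 64.


def fake_predict(batch):
    # Stand-in for sess.run(eval_prediction, ...): accepts full batches only.
    assert len(batch) == EVAL_BATCH_SIZE
    return [2 * x for x in batch]


def eval_in_batches(data):
    size = len(data)
    if size < EVAL_BATCH_SIZE:
        raise ValueError("batch size for evals larger than dataset: %d" % size)
    predictions = [None] * size
    for begin in range(0, size, EVAL_BATCH_SIZE):
        end = begin + EVAL_BATCH_SIZE
        if end <= size:
            predictions[begin:end] = fake_predict(data[begin:end])
        else:
            # Re-run the last full-size window and keep only the unseen tail.
            batch_predictions = fake_predict(data[-EVAL_BATCH_SIZE:])
            predictions[begin:] = batch_predictions[begin - size:]
    return predictions


data = list(range(10))  # 10 items: two full batches plus a tail of 2.
predictions = eval_in_batches(data)
print(predictions)
```

Every item gets exactly one prediction, even though items 6 and 7 pass through the stand-in model twice.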

Create a local session to run the training

start_time = time.time()  # Reference point for the per-step timing below.
with tf.Session() as sess:

Run all the initializers to prepare the trainable parameters

    tf.initialize_all_variables().run()

Loop through training steps

Compute the offset of the current minibatch in the data. We could use better randomization across epochs.

    for step in xrange(int(num_epochs * train_size) // BATCH_SIZE):
        offset = (step * BATCH_SIZE) % (train_size - BATCH_SIZE)
        batch_data = train_data[offset:(offset + BATCH_SIZE), ...]
        batch_labels = train_labels[offset:(offset + BATCH_SIZE)]

This dictionary maps the batch data (as a numpy array) to the node in the graph it should be fed to.

        feed_dict = {train_data_node: batch_data,
                     train_labels_node: batch_labels}

Run the graph and fetch some of the nodes

        _, l, lr, predictions = sess.run(
            [optimizer, loss, learning_rate, train_prediction],
            feed_dict=feed_dict)

        if step % EVAL_FREQUENCY == 0:
            elapsed_time = time.time() - start_time
            start_time = time.time()
            print('Step %d (epoch %.2f), %.1f ms' %
                  (step, float(step) * BATCH_SIZE / train_size,
                   1000 * elapsed_time / EVAL_FREQUENCY))
            print('Minibatch loss: %.3f, learning rate: %.6f' % (l, lr))
            print('Minibatch error: %.1f%%' % error_rate(predictions, batch_labels))
            print('Validation error: %.1f%%' % error_rate(
                eval_in_batches(validation_data, sess), validation_labels))
            sys.stdout.flush()
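The loop above calls error_rate, which this walkthrough never defines. A minimal sketch, assuming predictions is a sequence of per-class score rows and labels a sequence of integer class indices. It is written in plain Python so it also accepts numpy arrays; with numpy at hand, numpy.argmax would be the more natural way to pick the predicted class.

```python
def error_rate(predictions, labels):
    """Return the percentage of rows whose top-scoring class is not the label."""
    correct = 0
    for row, label in zip(predictions, labels):
        # The predicted class is the index of the highest score in the row.
        predicted = max(range(len(row)), key=lambda i: row[i])
        if predicted == label:
            correct += 1
    return 100.0 - 100.0 * correct / len(labels)


# Made-up scores for four examples and two classes.
predictions = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
labels = [1, 0, 0, 0]
err = error_rate(predictions, labels)
print(err)  # 25.0
```

Three of the four made-up examples are classified correctly, giving an error rate of 25%.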

Show the test error:

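    test_error = error_rate(eval_in_batches(test_data, sess), test_labels)
    print('Test error: %.1f%%' % test_error)
    # FLAGS holds the original script's command-line flags, which are not
    # shown in this walkthrough.
    if FLAGS.self_test:
        print('test_error', test_error)
        assert test_error == 0.0, 'expected 0.0 test_error, got %.2f' % (
            test_error,)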

More information

Use with tf.device(...) statements to specify which CPU or GPU to use for operations:

with tf.Session() as sess:
    with tf.device("/gpu:1"):
        matrix1 = tf.constant([[3., 3.]])
        matrix2 = tf.constant([[2.],[2.]])
        product = tf.matmul(matrix1, matrix2)
        ...

- "/cpu:0": The CPU of your machine.

- "/gpu:0": The GPU of your machine, if you have one.

- "/gpu:1": The second GPU of your machine, etc.

If you have more than one GPU, the GPU with the lowest ID will be selected by default.

Using a single GPU on a multi-GPU system:

# Creates a graph.
with tf.device('/gpu:2'):
    a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')
    b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')
    c = tf.matmul(a, b)
# Creates a session with log_device_placement set to True.
sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))
# Runs the op.
print(sess.run(c))

Using multiple GPUs:

# Creates a graph.
c = []
for d in ['/gpu:2', '/gpu:3']:
    with tf.device(d):
        a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3])
        b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2])
        c.append(tf.matmul(a, b))
with tf.device('/cpu:0'):
    sum = tf.add_n(c)
# Creates a session with log_device_placement set to True.
sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))
# Runs the op.
print(sess.run(sum))